Introduction

Recently, Cujo and Adversa hosted a really interesting contest for who could best fool a machine learning models. One of the two tracks was specifically about running so-called adversarial attacks on a facial recognition system, which I happen to have experience with. It turns out that my entry won the first prize valued at $3000 dollars by a healthy margin, so today I am going to go through my approach. You can even run the same attacks yourself using my code.

The Challenge

We are given 10 pictures of celebrities that an ML system has been trained to recognize correctly and our task is to change the ML system's prediction from each of these celebrities to each of the others. This means that we have to submit a total of 90 modified images. The confidence in the desired prediction is our score, which means that the best possible score would be 90. Crucially, we do not know the exact model that is used for the classification, but can only query the model on specific examples using an API. This is what we usually call a "black-box attack" because we cannot open up the model to see what's going on inside.

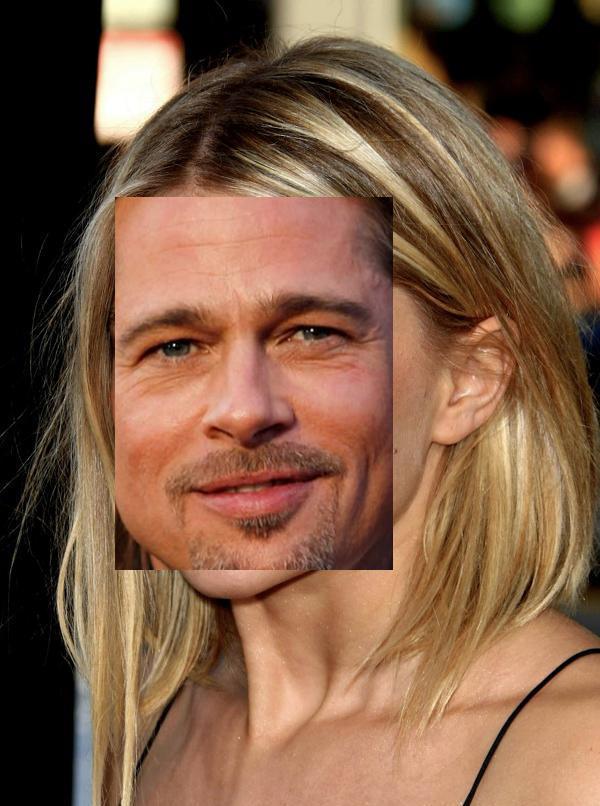

Of course, the obvious way to make a model predict "Brad Pitt" is simply by sending a picture of Brad Pitt instead of whatever celebrity we are starting from. But of course we aren't allowed to do that because the challenge also tracks a "stealthiness" score that judges how different our submitted image is from the original. All images have to receive a stealthiness score of 50% or higher in order for the attack to actually count. If we send a completely different image then our stealthiness is always just 0.

Fair enough, so then how about we don't replace the entire image, but instead just the important part? I used an open-source face detector to cut out a bounding box around the target face and paste it smack in the middle of the source face. This might well distort the target face a little bit if the two bounding boxes have slightly different shapes but we can worry about that later.

Stealthiness: 27%

Success: False

The next thing we might wonder about is if we really need to paste in the face completely or if we can leave it slightly transparent so that the image is not changed quite as drastically. We can try lots of different opacity values and see if there is one that has high confidence and high stealthiness.

We can see that we have a sharp transition from very low confidence to 99% confidence when only slightly changing the opacity value.

Stealthiness: 34%

Success: False

We are definitely making progress but it's not good enough yet. The attack is clearly not "stealthy" enough. The most noticable feature appears to be the boundary of the crop so naturally we can try smoothen this out a little bit. This introduces some new parameter for how gradual the transition between the two images should be. For now, we will just fix this parameter to some arbitrary number and see what happens. Here I am using 50 pixels (that means it takes 50 pixels to linearly move from zero opacity to maximal opacity) and then again I again interpolate through the maximal opacity. It turns out this way we finally hit our first successful image.

Stealthiness: 51%

Success: True

Drunkenly stumbling to Success

Now that we have a successful starting point, we should try to increase the confidence as much as possible without dropping the stealthiness below the magic 50% mark. But, actually, there were a ton of arbitrary paramaters besides the opacity that we didn't even try to optimize. The smoothing parameter, of course, but also the exact position, width and height of the crop in the source and target images. All together this makes for a total of 10 parameters that we could play around with in order to optimize our score. The simplest way of doing this would be a grid search, i.e. we simply pick a bunch of values for each parameter and then try every possible combination. With only 10 values for each parameter and with queries taking on average 2 seconds, this would take over 600 years. Probably not the way to do it...

Clearly, our search for the best parameters has to be a little more strategic. We can start at the point that we found by linearly going through the opacity values and then start moving all paramaters randomly. Every time we successfully improve our score, we accept the step and if don't improve then we simply try a different step instead. These random steps can be noise (in my case Gaussian) multiplied by some parameter-specific step size. After many steps we can drop all step sizes so that the algorithm starts to more carefully explore the neighborhood around the best point found so far.

Exactly what should be the score function for this though? After all we need to pay attention to both the confidence and the stealthiness. Well, the idea is to always optimize for high confidence under the constraint that the stealthiness is higher than 50%. As long as the stealthiness is high enough I therefore return a value that only depends on the confidence and that is higher than any score that could be reached with a lower stealthiness. However, if the stealthiness is too small then I maximize the sum of stealth and confidence. There are a few more hacks in there, for example, if we ever achieve 100% confidence we want to only optimize stealth under the constraint that confidence remain at 100%. If this all sounds a bit cryptic, the complete function is shown below.

if confidence<0.01:

return -1 + stealth

elif confidence + stealth < 1:

return confidence

elif stealth<0.5:

return confidence + stealth

elif conf<1:

return 2 + confidence

else:

return 2 + confidence + stealth

With this method in place, we can now start attacking one pair after another using hundreds or even thousands of queries. You can see the result of such a random search in the image below. The final confidence is extremely high. The strange color artifacts come from the fact that on some attacks I allowed the opacity to take on values bigger than 1.

Stealthiness: 51%

Success: True

Refining the Attack

Within less than a day, this approach had put me at the top of the leaderboard at a tentative 89.98 (out of 90). However, secretly I already had much better results stored away that would get me to 89.999 and slowly keep improving by running more and more queries. But if I was hiding better results until the last day of the challenge, wasn't everybody else probably also doing that? I had to push further.

After experimenting with additional parameters in the original attack (like allowing brightness, contrast and saturation to be changed and placing colored dots in the image) and seeing diminishing returns there I decided to write a second attack. The basic idea is exactly the same as before, i.e. pasting one face onto another. Since maybe the rectangular bounding box picks up too much unnecessary background, what if we cutout the face exactly. Using a public face segmentation model we can find a pixel-exact mask of the regions we want to copy.

Now we can paste the faces into the images at arbitrary scale, rotation, opacity etc. which again spans a parameter space that the random walk can explore. It turns out that for some image pairs, this method is more effective at finding high confidence points, even if the results might seem very invasive to a human.

Stealthiness: 71%

Success: True

Pushing the Limits

With the second attack I was slowly but surely pushing the scores of more and more image pairs to their limits. A whole 81 out of 90 points had confidence of at least 99.9999% and half of those sat at an exact 100%. Just one single pair remained so stubborn that I decided to include a whole new attack just to break it. No matter which of the two attacks I used, Paul McCartney to Demi Moore always remained at "only" 99.9976%. Unacceptable, right? However, at least the stealthiness remained somewhat high at about 59%. Could I maybe trade some of that spare stealthiness for a little extra confidence?

The way I did it was by integrating an existing black-box method called Square Attack that is known to do well on some black-box attack tasks. This attack gets some budget for how much it is allowed to modify each pixel and then starts modifying square-shaped regions within this budget. Giving a large budget would reduce the stealthiness too much and giving a small budget would not improve the confidence but there was a sweet spot at which I obtained the following image.

Stealthiness: 50%

Success: True

Was the improvement of 0.0005% really worth spending several hours on this? Absolutely!

Conclusion

With these ideas, this code and over 300,000 API calls to the quietely suffering servers you too could reproduce my score of 89.999912. Ultimately though, I believe the final results are actually way too noticable in order to really pass as "adversarial examples". In some sense the adversarial attack has broken the stealthiness detector more so than the classifier itself. Defining a good stealthiness score and threshold for such a challenge is very difficult and is basically a major outstanding problem in robustness research (where very simple proxies are used). I would like to see a white-box version of this challenge where the model is completely known and the stealthiness threshold >99%.

I also want to thank the organizers of this challenge for hosting it and for always being so quick in answering questions. And if you are interested in seeing another approach to this challenge, check out the github repo of the team that scored second place in this challenge. Their solution is actually much more interesting as it uses so-called transfer attacks that need 100 times fewer queries to the attacked model by attacking their own local surrogate models.